Deleting a large amount of data from the MS SQL table

From time to time there is a need to remove a large amount of data from the MS SQL table. By large amounts, I mean that the table contains a few million rows and it occupies a few gigabytes of the storage.

Why deleting from such a table can be an issue? There are two main reasons:

- during the delete operation, the table is locked so the selects, updates, and inserts are waiting

- the delete operation is saved in the database log – the database engine should be able to revert the operation if there is an issue

Running the delete query on such a table can take minutes, consume a large part of the memory and it may lead to issues with the applications running on the same database.

What are the ways you can choose to prevent such issues?

Truncate instead of delete

If you want to empty the whole table, you should consider the truncate query instead of delete. Truncate operation empties the table and is not saving the removed data into the recovery log. It is also pretty quick. The issue is that it removes all records from the table – there is no way to remove only some of them. But, there is a way…

Truncate with backup of the important rows

If you want to keep only a small part of the table and remove all other records, you can first copy the data that should be kept. Copy it into a temporary table. Once copied, you can truncate the “large table”. Once truncated, you can copy the data back. This method is also rather quick but requires more steps. Also, for some time there is no data in the “large table” so if your application is using it for some reason, it is not the way to go.

The looped partial delete statement

The method I’m using in case I need access to the data is the looped partial delete. Instead of the “delete all unused rows in one go” I’m performing a set of small delete statements. You may as why? Each statement is considered a separate query. This means that the selects, updates, and inserts that are waiting, can be executed “in the meantime” – between the execution of each delete statement. The database engine is taking care of the proper CPU time management.

The small delete statements also require a smaller amount of memory to execute and the log entries are smaller. Of course, the whole log history for the whole delete will not be smaller, but it will be logged in smaller chunks.

This means that the application that is using the database will work properly, while the delete operation is performed “in the background”. So, how the looped partial delete looks like? Here is the query I’m using to perform this operation:

IsRepeat:

DELETE top(5000) FROM my_large_table WHERE year(recorddate) < 2019

IF @@ROWCOUNT > 0

GOTO IsRepeat

The core of the query is the delete statement. Please note that I’m using a regular delete query with the where statement. The difference is that I’m asking the engine to delete only the top 5000 rows. Once the 5000 rows are reached, the query stops. The number of affected rows is stored in the @@ROWCOUNT variable. The “if” statement is checking if there were some rows affected and if there were, the “goto” statement jumps to the beginning of the loop. Once all unused records are removed, the @@ROWCOUNT variable value reaches zero. The “if” statement condition is not met and the whole loop ends.

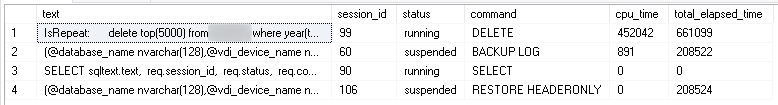

How does it look like on the list of running sessions? Let’s take a look at the table below:

As you can see, the CPU time for the delete loop is about 70% of the total elapsed time. This means that “in the meantime” the engine is taking care of other queries and they are not waiting locked by the delete operation. In the example below, there is even the log backup performed in the background. The application queries are not listed, because they are not waiting in the queue long enough.

In your case, you should check what value in the “top” part of the delete statement works properly. In my example, I used 5000 rows, because in most cases such a delete statement takes no more than half a second to execute. This means that every half second, locked queries are able to run. In your case, different values can be used. You can check the smaller number if it works for you, but you can also try the larger. As a rule of thumb, the delete should not take more than a few hundred milliseconds if you want to keep your application running properly. If the database is heavily loaded, the small limit, such as 100 rows can also be considered.