Basic text processing in Linux

In my case, text processing is mostly reviewing logs and looking for things that are stored in them. Sometimes dig through the Git repository or trying to find a setting in configuration files. In such cases, I use a set of easy and effective commands. This is basic knowledge, but also something that slips out of your memory if you are not using it frequently. Thus the article – remind us what can be used and how.

Finding out what’s in the file – tail and head

Before I even start to dig into files, sometimes I simply want to check what is in there. The ‘tail’ and ‘head’ commands are great in this field. ‘Head’ displays the begging of the file, while ‘tail’ displays the last lines of it. The usage of both is almost similar. The very basic usage is:

head access.log tail access.log

The above commands will display the top 10 lines of the file (head) and the last 10 lines of the file (tail).

If you want to limit the number of lines, you can simply add parameter of ‘-n’ like this:

head -n 5 access.log tail -n 5 access.log

In the example above, the limit is set to 5 lines in each case. If you want, you can display multiple files at once:

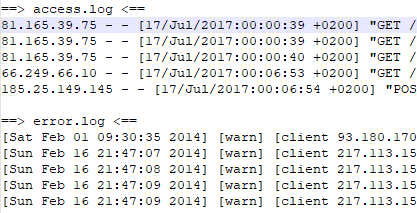

head -n 5 access.log error.log tail -n 5 access.log error.log

Now, we will see 5 lines of ‘access.log’ followed by 5 lines of ‘error.log’ in both cases. They are clearly distinguished to show which file is the source of data:

tail -f access.log

This one will display the last 10 lines of the file but will stay executed and will display data appended to the file as the file grows. In the above case, you can also provide multiple file names and watch them simultaneously.

Selecting what to see – grep

Grep is a command-line utility for searching plain-text data sets and files for lines that match a regular expression. I use it to filter log files and to look for things. For instance:

grep "17/Jul/2017" access.log

will display only lines containing the date of 17/Jul/2017. This way I can limit data to a particular day or particular IP. Sometimes I use it to find a particular page calls:

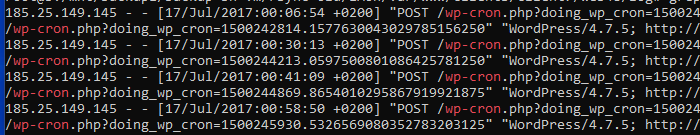

grep "wp-cron" access.log

grep -v "127\.0\.0\.1" access.log

The above will remove from the output all the lines that contain the IP of “127.0.0.1”. Have you noticed that all dots are escaped? This is because the dot is treated as “any character” and we want to focus on a particular IP address, not strings that contain its parts.

When using grep, we can search through multiple files:

grep "127\.0\.0\.1" *.log grep "127\.0\.0\.1" *.log -r grep "my setting" /etc/* -r

The first one will search for the IP of “127.0.0.1” in all files matching the pattern of “*.log” in the current directory. The second one will do the same, but also dig recursively in the subdirectories. The third one will search for “my setting” in all files in the ‘/etc/’ directory and subdirectories. The output of these commands will contain not only the lines but also the name of the file.

Displaying part of the line

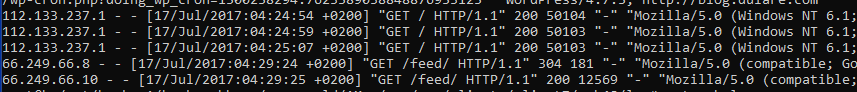

When I want to focus on a particular part of the log file lines, I use ‘cut’ to split the line into fields and display only a subset of them. For instance, we have such a log file:

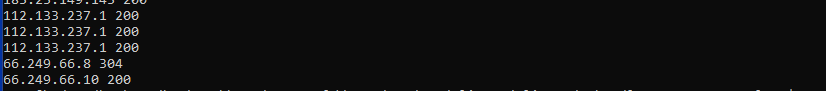

cat access.log | cut -d" " -f1,9

which will result with:

The commend above contains two things: first is ‘cat access.log’ which is simply outputting the contents of the ‘access.log’ and the second is ‘cut -d” ” -f1,9’ which is splitting lines by delimiter of ” ” (space) and displaying field number 1 and 9 (IP address and response code). The ‘|’ in the middle is “pipe” which is directing the output of the first command to the input of the second one. This way we can connect multiple commands to create more complex processing pipeline.

Count the number of lines

When I want to check if I should review results one by one or find a better way, I’m simply counting the number of lines. Especially when I search for something using ‘grep’ and I want to check how big is the outcome:

grep "my setting" /etc/* -r | wc -l 1528

The above is searching for “my setting” in all files in the ‘/etc/’ folder and its subdirectories, the lines containing the search phrase are then sent to the ‘wc -l’ which is counting them. ‘wc’ stands for ‘word count’ but with the parameter of ‘-l’ it is counting lines instead. When I see the result such as here – over 1.500 lines – I think of a better way of reviewing results than looking at them line by line 🙂